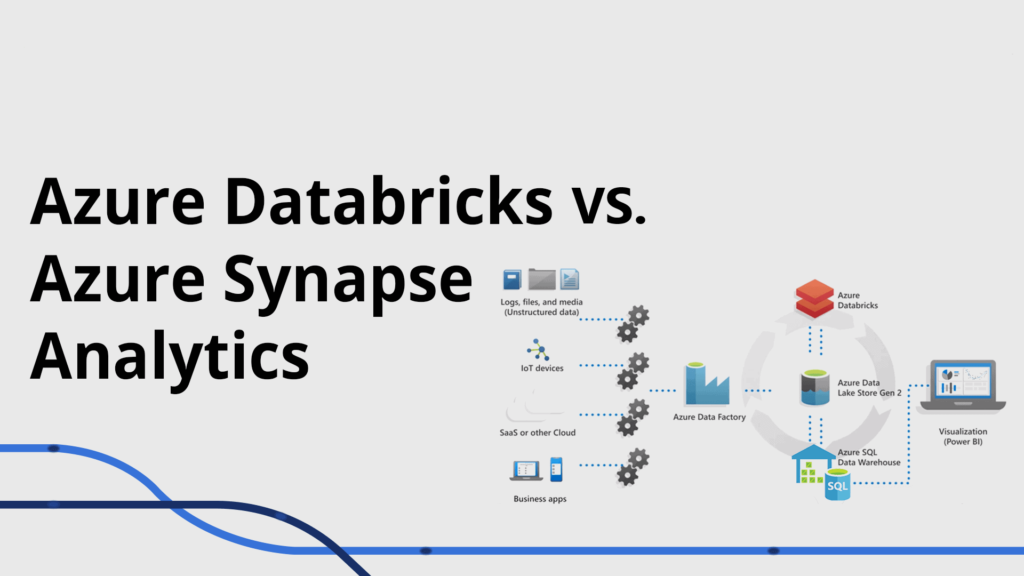

If you are looking for a cloud-based service to handle your data processing and analysis needs, you might have come across two popular options: Azure Databricks and Azure Synapse.

Both of them are part of the Microsoft Azure ecosystem and provide different capabilities for working with big data. But how do they compare, and which one should you choose for your specific scenario?

In this article, we will explore the features and use cases of Azure Databricks and Azure Synapse and compare the two.

What is Azure Databricks?

Azure Databricks is a fast, scalable, and collaborative analytics platform that is based on Apache Spark, an open-source analytics engine.

It provides a fully managed and optimized environment for processing and analyzing large volumes of data.

You can write your code in Python, Scala, R, or SQL in notebooks (or in Java or Scala packaged as JAR jobs) and run it on clusters of virtual machines that Azure Databricks provisions for you and can scale automatically.

You can also use notebooks to interactively explore your data, visualize it, and share it with others. Azure Databricks is designed for data engineering, data science, machine learning, artificial intelligence, and streaming workloads.

It has tight integration with other Azure services such as Azure Data Lake Storage, Azure Cosmos DB, Azure ML, Power BI, and more.

It also supports advanced features such as Delta Lake, a storage layer that adds reliability and performance to your data lake; MLflow, an open-source platform for managing the end-to-end machine learning lifecycle; and Git integration for version control and collaboration.

What is Azure Synapse?

Azure Synapse is an integrated analytics service that combines enterprise data warehousing, big data processing, and data integration into a single platform.

It has deep integration with other Azure services such as Power BI, Azure Cosmos DB, Azure Machine Learning, and more. Its two main compute engines are the dedicated SQL pool and the Apache Spark pool, complemented by a serverless SQL pool.

The dedicated SQL pool is the enterprise data warehousing feature of Azure Synapse. It allows you to store your data in relational tables with a columnar storage format that reduces storage costs and improves query performance.
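To illustrate why a columnar layout can reduce storage, the following toy Python sketch pivots a few rows into columns and applies run-length encoding, one of several compression techniques columnar formats can use. It is not how Azure Synapse implements columnstore indexes; the `rows` data and the helper names are hypothetical.

```python
from itertools import groupby

# Hypothetical sales rows: (region, product, quantity)
rows = [
    ("West", "Widget", 3),
    ("West", "Widget", 5),
    ("West", "Gadget", 2),
    ("East", "Gadget", 7),
    ("East", "Gadget", 1),
    ("East", "Widget", 4),
]

def to_columns(rows, names):
    """Pivot row tuples into one list per column (a columnar layout)."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}

def run_length_encode(values):
    """Collapse runs of consecutive repeated values into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]

columns = to_columns(rows, ["region", "product", "quantity"])
encoded = {name: run_length_encode(values) for name, values in columns.items()}

for name, runs in encoded.items():
    print(f"{name}: {len(columns[name])} values -> {len(runs)} runs {runs}")
```

Here the low-cardinality `region` column collapses to 2 runs and `product` to 3, while `quantity` gains nothing. A query that needs only `region` also avoids reading the other columns at all.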

You can use SQL to query your data at massive scale and take advantage of features such as massively parallel (distributed) query processing, columnstore indexes, partitioning, caching, compression, and encryption.
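A dedicated SQL pool spreads each table's rows across 60 distributions and processes queries on them in parallel. The sketch below models a hash-distributed table in plain Python under two assumptions: `zlib.crc32` stands in for the engine's internal hash function, and the `orders` data is hypothetical. It shows why aggregating on the distribution column needs no extra data movement.

```python
import zlib
from collections import defaultdict

NUM_DISTRIBUTIONS = 60  # a dedicated SQL pool spreads each table across 60 distributions

def distribution_for(key: str) -> int:
    """Pick a distribution by hashing the distribution column.
    zlib.crc32 is a stand-in; the engine's real hash function is internal."""
    return zlib.crc32(key.encode("utf-8")) % NUM_DISTRIBUTIONS

# Hypothetical orders: (customer_id, amount), hash-distributed on customer_id
orders = [("c1", 10.0), ("c2", 5.0), ("c1", 2.5), ("c3", 8.0), ("c2", 1.0)]

# Load step: place each row in the distribution chosen by its key's hash.
distributions = defaultdict(list)
for customer_id, amount in orders:
    distributions[distribution_for(customer_id)].append((customer_id, amount))

def local_sum(rows):
    """Aggregate one distribution's rows without looking at any other distribution."""
    totals = defaultdict(float)
    for customer_id, amount in rows:
        totals[customer_id] += amount
    return totals

# Query step: SUM(amount) GROUP BY customer_id. Every row for a given customer
# lives in one distribution, so the partial results can simply be combined.
result = {}
for rows in distributions.values():
    result.update(local_sum(rows))

print(sorted(result.items()))
```

On a real system each distribution's local aggregate runs on its own compute resources. Grouping on a column other than the distribution column would first require moving rows between distributions.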

The Spark pool is the big data processing feature of Azure Synapse. It allows you to run Apache Spark applications on clusters of virtual machines that Azure Synapse provisions for you and can scale automatically.

You can write your code in Python, Scala, SQL, R, or .NET and run it on a Spark pool. You can also use notebooks to interactively explore your data, visualize it, and share it with others.

Azure Synapse also provides a serverless SQL pool that lets you query data in sources such as Azure Data Lake Storage, Azure Cosmos DB (through Azure Synapse Link), and Blob Storage without provisioning any resources.

You can use standard T-SQL syntax to query your data on demand, and you are billed for the amount of data each query processes rather than for provisioned capacity.
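Because serverless billing is tied to data processed, file format and column selection matter. The back-of-the-envelope Python sketch below assumes a hypothetical price per TB, decimal terabytes, and a 2 TB Parquet dataset whose 20 columns are equally sized; check the Azure pricing page for real rates. Azure's "data processed" figure can include more than the bytes read from files, so treat the result as a rough estimate.

```python
BYTES_PER_TB = 10 ** 12  # assumption: decimal terabytes; check Azure's billing definition

def estimate_query_cost(bytes_scanned: int, price_per_tb: float) -> float:
    """Estimate the charge for one serverless SQL query billed on data processed.
    price_per_tb is a placeholder input, not a published Azure rate."""
    return bytes_scanned / BYTES_PER_TB * price_per_tb

# Hypothetical Parquet dataset: 2 TB in total, spread evenly over 20 columns.
total_bytes = 2 * BYTES_PER_TB
bytes_per_column = total_bytes // 20

price = 5.0  # hypothetical price per TB, for illustration only

select_star = estimate_query_cost(total_bytes, price)          # reads every column
select_two = estimate_query_cost(2 * bytes_per_column, price)  # reads only 2 columns

print(f"SELECT *  : {select_star:.2f}")
print(f"2 columns : {select_two:.2f}")
```

In this setup, selecting two columns instead of `SELECT *` cuts the estimate by 90%, because Parquet lets the engine read only the columns a query needs.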

Azure Databricks vs Azure Synapse: Comparison

The following table summarizes some of the key differences between Azure Databricks and Azure Synapse:

| Feature | Azure Databricks | Azure Synapse |
|---|---|---|
| Data processing engine | Apache Spark | Dedicated SQL pool, serverless SQL pool, or Spark pool |
| Data storage format | Delta Lake or Parquet | Relational tables or Parquet |
| Data ingestion | Auto Loader or Spark APIs | Copy activity or Spark APIs |
| Data transformation | Spark APIs or SQL | SQL or Spark APIs |
| Data analysis | Notebooks or BI tools | Notebooks or BI tools |
| Data visualization | Built-in charts or BI tools | Built-in charts or BI tools |
| Machine learning | MLlib, MLflow, or Azure ML | MLlib, MLflow, or Azure ML |
| Streaming | Structured Streaming or Kafka | Structured Streaming or Kafka |
| Serverless option | Yes (serverless SQL warehouses) | Yes (serverless SQL pool) |
| Git integration | Yes | Yes (Azure DevOps Git or GitHub) |

Azure Databricks vs. Azure Synapse: Use Cases

Depending on your data analytics needs, you might prefer one service over the other.

Here are some common scenarios where you might want to use either Azure Databricks or Azure Synapse:

If you need a traditional data warehouse with SQL capabilities and high performance, you might want to use Azure Synapse with a dedicated SQL pool.

You can store your data in relational tables and query it with SQL, using features such as distributed query processing, columnstore indexes, partitioning, caching, compression, and encryption.

You can also use Power BI to create dashboards and reports on your data.

If you need a data lake with reliability and performance, you might want to use Azure Databricks with Delta Lake.

Delta Lake stores your data as Parquet files plus a transaction log, and you can query it using Spark APIs or SQL with features such as ACID transactions, schema enforcement, time travel, upserts, and deletes.

You can also use notebooks to explore your data and visualize it using built-in charts or BI tools.
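The Delta Lake features listed above can be hard to picture. The following is a toy, in-memory Python model of the ideas (atomic commits, schema enforcement, upserts, deletes, and time travel), not Delta Lake's API or storage format; the class `ToyVersionedTable` and its methods are hypothetical. On Databricks you would use Delta's own operations, such as SQL `MERGE INTO`, `DELETE`, and `SELECT ... VERSION AS OF`.

```python
from dataclasses import dataclass, field

@dataclass
class ToyVersionedTable:
    """Hypothetical in-memory model: every commit appends a full snapshot.
    (Delta Lake instead records file-level changes in a transaction log.)"""
    schema: tuple
    key: str
    versions: list = field(default_factory=lambda: [{}])  # version 0 is empty

    def _check_schema(self, row: dict) -> None:
        # Schema enforcement: reject rows whose columns don't match the schema.
        if tuple(sorted(row)) != tuple(sorted(self.schema)):
            raise ValueError(f"schema mismatch: {sorted(row)}")

    def merge(self, rows: list) -> int:
        """Upsert rows by key as one all-or-nothing commit; return the new version."""
        for row in rows:
            self._check_schema(row)  # validate everything before writing anything
        snapshot = dict(self.versions[-1])
        for row in rows:
            snapshot[row[self.key]] = row  # insert new keys, replace existing ones
        self.versions.append(snapshot)
        return len(self.versions) - 1

    def delete(self, predicate) -> int:
        """Commit a new version without the rows matching the predicate."""
        snapshot = {k: r for k, r in self.versions[-1].items() if not predicate(r)}
        self.versions.append(snapshot)
        return len(self.versions) - 1

    def read(self, version=None) -> list:
        """Read the latest version, or an older one ("time travel")."""
        snapshot = self.versions[-1 if version is None else version]
        return sorted(snapshot.values(), key=lambda r: r[self.key])

table = ToyVersionedTable(schema=("id", "status"), key="id")
table.merge([{"id": 1, "status": "new"}, {"id": 2, "status": "new"}])      # version 1
table.merge([{"id": 2, "status": "shipped"}, {"id": 3, "status": "new"}])  # version 2
table.delete(lambda r: r["id"] == 1)                                       # version 3

try:
    table.merge([{"id": 4, "state": "new"}])  # wrong column name: no version is written
except ValueError as err:
    print("rejected:", err)

print(table.read())           # latest version
print(table.read(version=1))  # time travel to version 1
```

Because validation happens before any write, the rejected merge leaves the table at version 3, which mirrors the all-or-nothing behavior of an ACID commit.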

If you need a data engineering platform with scalability and flexibility, you might want to use Azure Databricks with Spark APIs.

You can ingest, transform, and process your data using various languages such as Python, Scala, R, SQL, Java, etc.

Auto Loader can incrementally load new data files as they arrive in cloud storage such as Azure Data Lake Storage, in either streaming or batch mode, into Delta Lake tables or Parquet files.
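Auto Loader itself is configured as a Structured Streaming source (`cloudFiles`) with a checkpoint location that records which files have already been ingested. The plain-Python sketch below models only that core idea, processing each new file once across runs. The function `ingest_new_files`, the JSON checkpoint file, and the file names are hypothetical, and this is not Auto Loader's implementation.

```python
import json
import tempfile
from pathlib import Path

def ingest_new_files(landing_dir: Path, checkpoint_file: Path) -> list:
    """Process only files not seen in earlier runs, then record them in the checkpoint.
    A toy model of incremental ingestion, not Auto Loader's actual implementation."""
    seen = set(json.loads(checkpoint_file.read_text())) if checkpoint_file.exists() else set()
    new_files = sorted(p.name for p in landing_dir.glob("*.json") if p.name not in seen)
    for name in new_files:
        records = json.loads((landing_dir / name).read_text())
        # A real pipeline would append these records to a Delta table here.
        print(f"ingested {len(records)} record(s) from {name}")
    checkpoint_file.write_text(json.dumps(sorted(seen | set(new_files))))
    return new_files

with tempfile.TemporaryDirectory() as tmp:
    landing = Path(tmp) / "landing"
    landing.mkdir()
    checkpoint = Path(tmp) / "checkpoint.json"

    (landing / "batch-001.json").write_text(json.dumps([{"id": 1}, {"id": 2}]))
    first_run = ingest_new_files(landing, checkpoint)   # picks up batch-001

    (landing / "batch-002.json").write_text(json.dumps([{"id": 3}]))
    second_run = ingest_new_files(landing, checkpoint)  # only batch-002 is new
    third_run = ingest_new_files(landing, checkpoint)   # nothing new to process

print(first_run, second_run, third_run)
```

Persisting the checkpoint is what lets a restarted job skip files it has already loaded instead of reprocessing the whole landing directory.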

You can also use MLlib to build machine learning models and MLflow to track and manage them.
